Image by Natalie Adams

Editor’s Note: As of February 2024, the Misinformation Monitor is now Reality Check, a weekly newsletter on misinformation and media online. Learn more and subscribe here on Substack.

Brand Danger: X and Misinformation Super-spreaders Share Ad Money from False or Egregiously Misleading Claims About the Israel-Hamas War

NewsGuard identified ads for 86 major advertisers — including top brands, educational institutions, governments, and nonprofits — on viral posts seen by a cumulative 92 million X users advancing false or egregiously misleading claims about the conflict

By Jack Brewster, Coalter Palmer, and Nikita Vashisth | Published on Nov. 22, 2023

Contributing reporting by Natalie Adams, John Gregory, Sam Howard, McKenzie Sadeghi, and Roberta Schmid

On X, programmatic advertisements for dozens of major brands, governments, educational institutions, and nonprofits are being displayed in the feeds directly below viral posts advancing false or egregiously misleading claims about the Israel-Hamas war, a NewsGuard analysis has found. Under the terms of a new advertising revenue sharing program that X introduced for its “creators,” a portion of the advertising income generated by these organizations would apparently be shared with these super-spreaders of misinformation.

From Nov. 13 to Nov. 22, 2023, NewsGuard analysts reviewed programmatic ads that appeared in the feeds below 30 viral tweets that contained false or egregiously misleading information about the war. Programmatic advertising uses algorithms to target digital ads at online readers. Brands typically do not select where programmatic ads run and indeed are unaware of where their programmatic ads appear.

These 30 viral tweets were posted by 10 of X’s worst purveyors of Israel-Hamas war-related misinformation; these accounts have previously been identified by NewsGuard as repeat spreaders of misinformation about the conflict. These 30 tweets have cumulatively reached an audience of over 92 million viewers, according to X data. On average, each tweet was seen by 3 million people.

A list of the 30 tweets and the 10 accounts used in NewsGuard’s analysis is available here.

The 30 tweets advanced some of the most egregious false or misleading claims about the war, which NewsGuard had previously debunked in its Misinformation Fingerprints database of the most significant false and misleading claims spreading online. These include that the Oct. 7, 2023, Hamas attack against Israel was a “false flag” and that CNN staged footage of an October 2023 rocket attack on a news crew in Israel. Half of the tweets (15) were flagged with a fact-check by Community Notes, X’s crowd-sourced fact-checking feature, which under X’s policy would have made them ineligible for advertising revenue. However, the other half did not feature a Community Note. Ads for major brands, such as Pizza Hut, Airbnb, Microsoft, Paramount, and Oracle, were found by NewsGuard on posts with and without a Community Note (more on this below).

In total, NewsGuard analysts identified 200 ads from 86 major brands, nonprofits, educational institutions, and governments that appeared in the feeds below 24 of the 30 tweets containing false or egregiously misleading claims about the Israel-Hamas war. The other six tweets did not feature advertisements. (On X, ads appear as “tweets” that are shown to users in feeds.) The ads NewsGuard found were served to analysts browsing the internet using their own X accounts in five countries: the U.S., U.K., Germany, France, and Italy.

NewsGuard’s report comes after Apple, Disney, and IBM pulled their ads from X after owner Elon Musk spoke approvingly of an antisemitic post on the platform. In response to NewsGuard’s emailed questions about its findings and the ads appearing in the feeds below tweets advancing misinformation, X’s press office sent an automated response: “Busy now, please check back later.”

On Nov. 21, after NewsGuard reached out to X about this report, Musk tweeted: “X Corp will be donating all revenue from advertising & subscriptions associated with the war in Gaza to hospitals in Israel and the Red Cross/Crescent in Gaza.” It is not clear what Musk meant by “revenue from advertising & subscriptions associated with the war in Gaza,” nor did he address the sharing of X’s revenues with many or all of these account holders for spreading misinformation. In response to NewsGuard’s inquiry on Nov. 22 asking for clarification about Musk’s announcement, X’s press office again replied with an automated response.

Oracle, Pizza Hut, and Anker: Monetizing Misinformation About a ‘Burnt Baby Corpse’

On Oct. 29, 2023, X owner Elon Musk said that commentators whose posts had been flagged by Community Notes would not be eligible to make money from ad dollars on that particular post. Nonetheless, NewsGuard found ads for 70 unique major organizations on 14 of the 15 tweets advancing war-related misinformation that did not feature a Community Note fact-check. This means that some of X’s worst purveyors of war-related misinformation would likely have been entitled to ad dollars from major organizations.

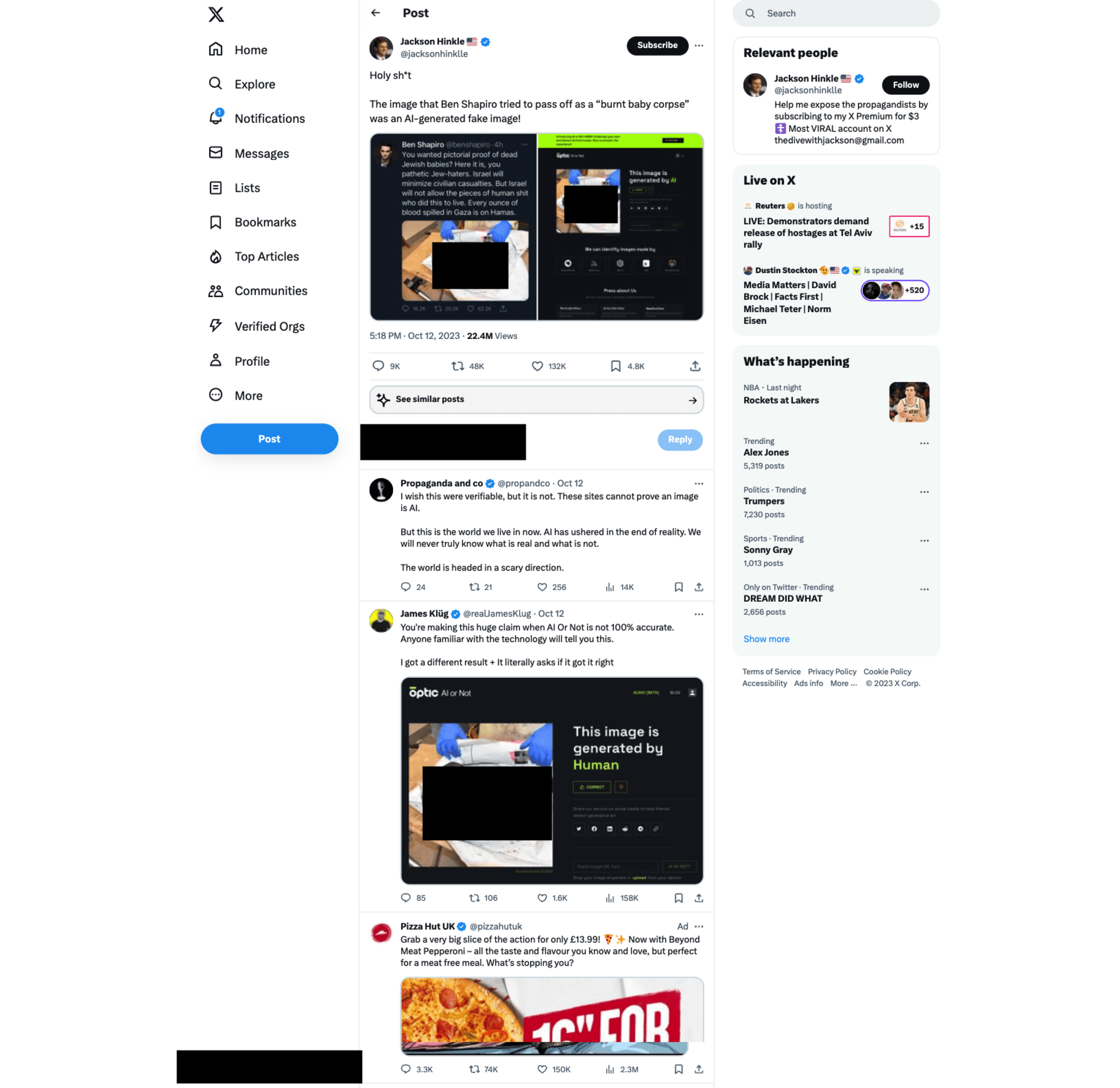

For example, NewsGuard found 22 ads for major organizations on three tweets posted by Jackson Hinkle advancing false claims about the war that did not feature a Community Note. Hinkle is a commentator who describes himself as an “American Conservative Marxist-Leninist” and has spread dozens of false and misleading claims about the war, NewsGuard has determined.

Ads for Oracle, Pizza Hut, and Anker, among others, were shown to NewsGuard analysts below a tweet posted by Hinkle on Oct. 12, 2023, that advanced the false claim that Daily Wire podcast host Ben Shapiro used artificial intelligence to generate an image of a child killed by Hamas. Hinkle’s tweet received 22 million views as of Nov. 20. Again, it is in the nature of programmatic advertising that brands are unaware of where their ads are appearing and whom their ads are supporting.

“Holy sh*t,” Hinkle said. “The image that Ben Shapiro tried to pass off as a ‘burnt baby corpse’ was an AI-generated fake image!” In fact, there is no evidence that the photo — which was first shared by the Israeli government — was generated using AI. Hany Farid, a professor at the University of California, Berkeley’s School of Information, told technology news site 404 Media that the image of the baby “does not show any signs it was created by AI.” He said, “The structural consistencies, the accurate shadows, the lack of artifacts we tend to see in AI — that leads me to believe it’s not even partially AI generated.” The X post did not feature a Community Note as of Nov. 20, meaning that Hinkle was likely eligible for ad sharing revenue.

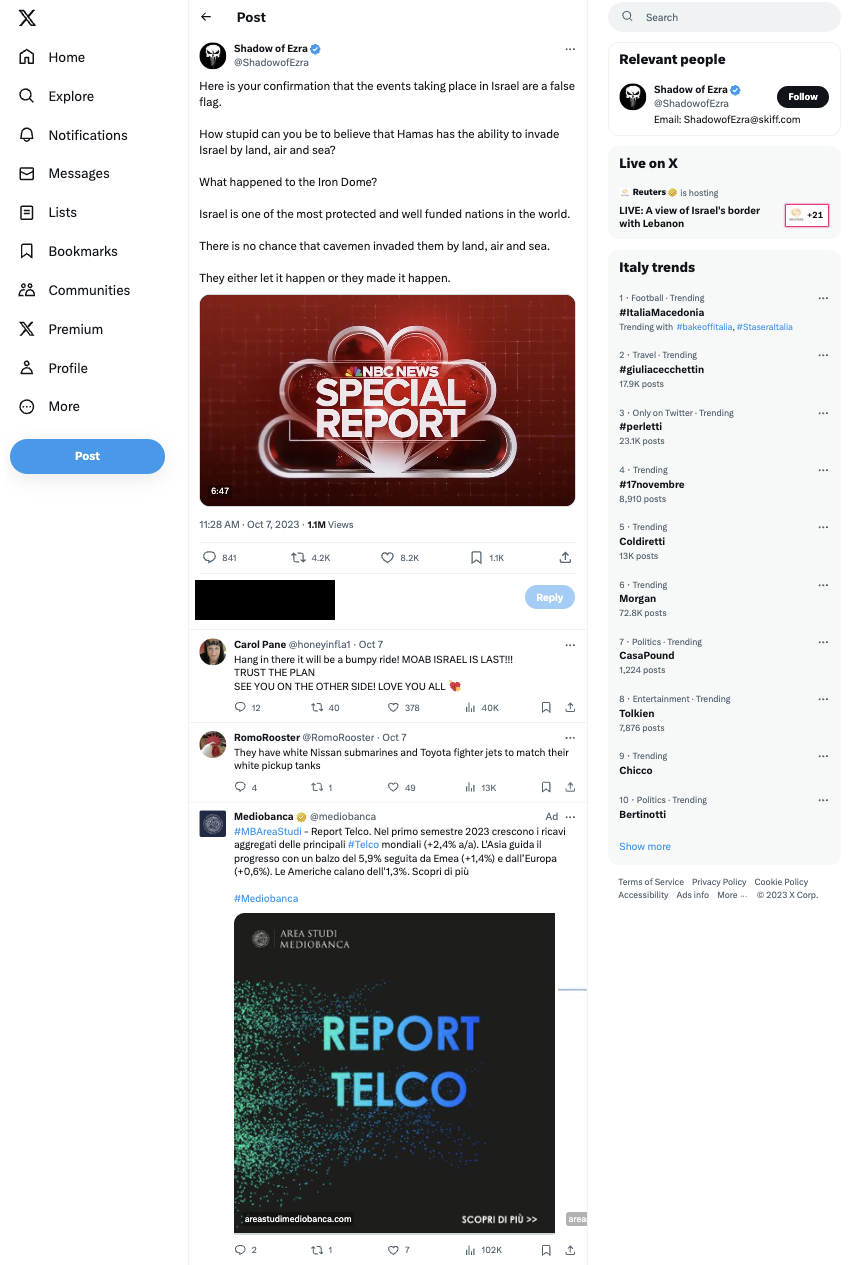

Similarly, NewsGuard found ads for 36 major advertisers (some organizations appeared more than once) on three tweets posted by far-right commentator @ShadowofEzra that advanced misinformation about the war. None of the three tweets contained a Community Note.

On one of those tweets, which advanced the unsubstantiated claim that the Oct. 7, 2023, attack on Israel by Hamas was a “false flag,” NewsGuard analysts were shown ads for Paramount, Microsoft, robotics company ABB Robotics, Italian investment bank Mediobanca, and commodities company Sappi Group. “Here is your confirmation that the events taking place in Israel are a false flag,” the tweet from @ShadowofEzra read. “How stupid can you be to believe that Hamas has the ability to invade Israel by land, air and sea?…They either let it happen or they made it happen.” The post had been viewed 1.1 million times as of Nov. 21, 2023. (On Nov. 17, Paramount said it stopped advertising on X. Paramount’s ad was shown to an analyst before that announcement.)

Similarly, ads for Airbnb, the Virgin Group, Taiwanese technology company Asus, conservative media company The Dispatch, and multibillion-dollar Swedish hygiene company Essity appeared alongside a post by conservative commentator Matt Wallace that baselessly asserted Hamas’ attack on Israel was an “inside job.” The post did not feature a Community Note and had received 1.2 million views as of Nov. 21.

It is worth noting that to be eligible for X’s ad revenue sharing, account holders must meet three specific criteria: they must be subscribers to X Premium ($8 per month), have garnered at least five million organic impressions across their posts in the past three months, and have a minimum of 500 followers. Each of the 10 super-spreader accounts NewsGuard analyzed appears to fit those criteria.
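The eligibility rules described above can be expressed as a simple check. The following is a minimal sketch, not X’s actual implementation: the class, field, and function names are hypothetical, and the thresholds are taken only from the criteria reported in this article, combined with the per-post Community Note rule Musk announced on Oct. 29.

```python
from dataclasses import dataclass

# Thresholds as reported in this article; the constant names are hypothetical.
MIN_ORGANIC_IMPRESSIONS_3MO = 5_000_000
MIN_FOLLOWERS = 500


@dataclass(frozen=True)
class Account:
    is_premium_subscriber: bool    # subscribes to X Premium
    organic_impressions_3mo: int   # organic impressions over the past three months
    followers: int


def meets_revenue_sharing_criteria(account: Account) -> bool:
    """Return True only if the account meets all three reported criteria."""
    return (
        account.is_premium_subscriber
        and account.organic_impressions_3mo >= MIN_ORGANIC_IMPRESSIONS_3MO
        and account.followers >= MIN_FOLLOWERS
    )


def post_likely_monetizable(account: Account, has_community_note: bool) -> bool:
    """Combine account eligibility with the per-post Community Note rule."""
    return meets_revenue_sharing_criteria(account) and not has_community_note
```

Under these assumptions, an eligible account’s post stops being monetizable once a Community Note is attached, which is why the distinction between noted and un-noted tweets matters in this analysis.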

Governments, Nonprofits Indirectly Funding the Israel-Hamas War Misinformation Machine

Of the 200 ads NewsGuard identified on super-spreader posts featuring war-related misinformation, 26 were for government-affiliated organizations, including government agencies, state-owned enterprises, and state-run foreign media outlets.

For example, NewsGuard found an ad for the FBI on a Nov. 9, 2023, post from Jackson Hinkle that claimed a video showed an Israeli military helicopter firing on its own citizens. The post did not contain a Community Note and had been viewed more than 1.7 million times as of Nov. 20.

“ISRAEL ADMITS they fired on their OWN CIVILIANS with APACHE ATTACK HELICOPTERS!” Hinkle wrote in a post linking to the video. In fact, the video showed Israeli Air Force planes carrying out attacks against Hamas over the Gaza Strip, according to GeoConfirmed, a group of open-source intelligence investigators.

The ad for the FBI that appeared below Hinkle’s tweet promoted the FBI’s work to stop hate crimes. “Hate crimes not only harm victims but also strike fear into their communities,” the ad said. “The #FBI is committed to combating hate crimes and seeking justice for victims.” NewsGuard sent two email messages and one contact form message to the FBI on Nov. 20 inquiring about whether the ad was placed on purpose. In response, an FBI spokesperson declined to comment and referred NewsGuard to X for questions about the placement of ads on the platform.

Other ads from government entities included an ad for the state-owned Abu Dhabi National Oil Company under a tweet baselessly claiming to show a Palestinian blogger faking injuries from the war; an ad from Taiwan’s Ministry of Culture under a post falsely claiming that a video showed Israel firing at its own civilians; and an ad for China Global Television Network (CGTN) — a Chinese government-owned broadcaster — under a post from Matt Wallace suggesting that 9/11 was a “false flag attack from [the] Israeli government.” None of the posts featured Community Notes as of Nov. 21.

NewsGuard also found ads for a handful of nonprofit organizations and educational institutions on tweets advancing misinformation about the war. For example, NewsGuard found an ad for Clean Air Action Fund, a nonprofit dedicated to reducing air pollution, on a tweet posted by @YosephHaddad that falsely claimed that CNN footage showed a Palestinian crisis actor. The tweet, which did not feature a Community Note, had been viewed more than 639,000 times as of Nov. 21. (NewsGuard was also served an ad for the Danish National Genome Center, an agency of Denmark’s Ministry of Health, on the same tweet.)

An ad for the University of Baltimore, a public university in Baltimore, Maryland, was shown to NewsGuard on the previously mentioned Hinkle tweet claiming that a video showed Israel firing on its own citizens.

And a tweet posted by @ShadowofEzra claiming that the war was a “false flag” featured an ad for the Royal Society of Chemistry, a nonprofit U.K. academic society with the goal of “advancing the chemical sciences.” In the tweet, @ShadowofEzra linked to a CNN interview showing National Security Council spokesperson John Kirby becoming emotional while discussing the Hamas attack on Israel that occurred on Oct. 7, 2023. “John Kirby starts crying live on CNN,” @ShadowofEzra said. “Need more proof it was a false flag?”

Methodology

From Nov. 13 to Nov. 22, 2023, NewsGuard analyzed 30 tweets advancing misinformation about the war posted by 10 accounts previously identified by NewsGuard as super-spreaders of Israel-Hamas war-related misinformation. NewsGuard defined a “misinformation super-spreader” as an account that has spread three or more false or egregiously misleading claims related to the war and has more than 100,000 followers on X. Many of the accounts have spread far more than three false or egregiously misleading war-related claims.

To simulate the programmatic ad experience of different users, NewsGuard used virtual private networks (VPNs), tools that enable users to browse the internet as if they were in another country, and repeatedly refreshed the page of each of the 30 tweets. For each tweet, NewsGuard browsed in the U.S., the U.K., Germany, France, and Italy and refreshed the page a maximum of 25 times. NewsGuard also scrolled to “reload” the feed a maximum of five times, because not all replies to a given tweet are shown at once on that tweet’s page.

In reviewing the 10 accounts, analysts looked for advertisements featured in the “replies” section of posts containing the war-related misinformation. Replies — and the ads featured among them — are shown directly below tweets. Analysts took screenshots of each advertisement.
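The scale of the sampling procedure described above can be illustrated with a short sketch. It is only an illustration of the stated caps, not the tooling NewsGuard used (analysts browsed manually); the tweet identifiers are placeholders, and all class and function names are hypothetical.

```python
from dataclasses import dataclass
from itertools import product

COUNTRIES = ("US", "UK", "DE", "FR", "IT")  # VPN locations named in the methodology
MAX_REFRESHES = 25  # cap on page refreshes per tweet per country
MAX_SCROLLS = 5     # cap on scrolls to load more replies


@dataclass(frozen=True)
class Session:
    tweet_id: str
    country: str
    max_refreshes: int = MAX_REFRESHES
    max_scrolls: int = MAX_SCROLLS


def build_sampling_plan(tweet_ids):
    """Create one capped browsing session per (tweet, country) pair."""
    return [Session(t, c) for t, c in product(tweet_ids, COUNTRIES)]


def max_page_refreshes(plan):
    """Upper bound on refreshes if every session reaches its cap."""
    return sum(s.max_refreshes for s in plan)


tweet_ids = [f"tweet_{i:02d}" for i in range(1, 31)]  # placeholder IDs for the 30 tweets
plan = build_sampling_plan(tweet_ids)
print(len(plan))                 # 150 tweet-country sessions
print(max_page_refreshes(plan))  # 3750 refreshes at most
```

Because the caps are maximums, the actual number of page loads may have been lower; the figures here only bound the effort implied by the stated limits.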

X has recently sued Media Matters for America over an article that the progressive advocacy group published about prominent brands’ ads appearing alongside accounts carrying extremist content. In that case, X has alleged that Media Matters created the pairing of these brands with that content by having the X accounts it was using follow only a selected group of prominent brands and only a selected group of accounts with extremist content, creating what X’s suit called an “inorganic” scenario in which the ads for those brands would appear next to that content, thereby producing “maliciously manufactured side-by-side images.”

Without speculating on the veracity of those allegations, we should note that NewsGuard’s methodology was different from the methodology that X claimed Media Matters for America used:

- NewsGuard did not manipulate the sample by selectively following certain brands or accounts. Instead, NewsGuard analysts reviewed naturally occurring viral content that the platform showed to them on their own personal X accounts.

- The tweets Media Matters for America analyzed received relatively few impressions. As noted above, the 30 tweets NewsGuard analyzed have cumulatively reached an audience of over 92 million viewers, according to X data. On average, each tweet was seen by 3 million people.

- NewsGuard capped both the number of times it refreshed each tweet in each country it analyzed (25) and the number of times it scrolled to unfurl the feed (five). (X shows only a limited number of replies to a tweet in a given feed.)