Image by Valerie Pavilonis

Editor’s Note: As of February 2024, the Misinformation Monitor is now Reality Check, a weekly newsletter on misinformation and media online. Learn more and subscribe here on Substack.

Plagiarism-Bot? How Low-Quality Websites Are Using AI to Deceptively Rewrite Content from Mainstream News Outlets

NewsGuard has identified 37 sites that use artificial intelligence to repackage articles from mainstream news sources without providing credit

By Jack Brewster, Macrina Wang, and Coalter Palmer | Published on August 24, 2023

Content farms are using artificial intelligence to scramble and rewrite thousands of articles from mainstream news sources without credit, NewsGuard has found.

In August 2023, NewsGuard identified 37 websites that are using chatbots to rewrite news articles that first appeared in news outlets including CNN, The New York Times, and Reuters. None of the original news sources was credited, despite the fact that the stories appeared to be drawn completely from the original source. Some of the 37 sites seemed to be fully automated, requiring no human supervision at all.

Moreover, some of these content farms featured programmatic ads from well-known companies, meaning blue-chip brands are unknowingly helping to fund the practice of using AI to deceptively reproduce content from mainstream sources. Content farms are sites that publish large numbers of often low-quality articles for the purpose of ranking higher on Google.

“The ability of AI to generate original content is really something that we’ve only seen over the last year or so to this level of sophistication,” Amir Tayrani, a partner at the law firm Gibson Dunn who specializes in constitutional and regulatory law, told NewsGuard. “And so we’re now in a world where it is increasingly difficult to distinguish between human content and AI-generated content, and increasingly difficult to identify these types of potential examples of plagiarism.”

It is unclear how to describe this new practice, including whether articles rewritten using AI constitute “original content.” At best, it could be called “efficient aggregation.” At worst, it might be seen as “turbocharged plagiarism.” Whatever it is called (and courts are likely to decide ultimately), never before have sites had the ability to rewrite articles created by others virtually in real time, and in a manner that can often be difficult to detect.

The usage policies for two leading chatbots, Google’s Bard and OpenAI’s ChatGPT, state that users are prohibited from employing the technology for plagiarism. Google’s policy states that users may not “represent generated content as original works, in order to deceive,” while OpenAI’s guidelines explicitly bar “plagiarism,” without defining that term. Other major AI models, such as Anthropic’s Claude and Microsoft’s Bing Chat, have similar policies.

NewsGuard sent two emails each to Google and OpenAI, inquiring about NewsGuard’s findings indicating that their tools are being used to rewrite content from other sites without credit. No response was received.

Sites such as Grammarly offer plagiarism-detection tools that analyze text and compare it with content available on the internet. However, NewsGuard found that Grammarly’s plagiarism-detection tool struggles to identify articles that have been rewritten from other sources using AI. This is likely because AI is successfully scrambling original content to the point that it is difficult for plagiarism-detection software to identify.

Plagiarizing Articles Beyond Recognition? Rewritten New York Times Stories, Generated in the Blink of an Eye

If not for a blatant tell, readers would likely have no idea that the content farms NewsGuard identified as using AI to rewrite articles were even doing so: All of the sites have published at least one article containing telltale error messages commonly found in AI-generated texts, such as “As an AI language model, I cannot rewrite this title …” and “Sorry, as an AI language model, I cannot determine the content that needs to be rewritten without any context or information …”

There are likely hundreds — if not thousands — of websites that are using AI to lift content from outside sources that NewsGuard could not identify because they have not mistakenly published an AI error message.

“This is the stuff done by careless bad actors,” Filippo Menczer, an AI researcher and computer science professor at the Indiana University Luddy School of Informatics, Computing, and Engineering, told NewsGuard, referring to the articles with AI error messages NewsGuard found on the 37 copycat sites.

If you are a bad actor, “it is so easy to fix,” Menczer said. “All [you] have to do is look for a string that says ‘As an AI language model’ … So you can assume that there are many, many, many more people who are at least a little bit more careful.”
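The string check Menczer describes also works in the other direction, as a way to flag pages that have published such messages. The following is a minimal sketch, not NewsGuard's actual method: the phrase list is an assumption drawn from the error messages quoted in this report, and the function name is hypothetical.

```python
import re

# Hypothetical phrase list, based on the error messages quoted in this report.
TELLTALE_PATTERNS = [
    r"as an ai language model",
    r"i cannot rewrite",
    r"paraphrased version of the original content",
]

# One case-insensitive pattern that matches any of the phrases above.
_TELLTALE_RE = re.compile("|".join(TELLTALE_PATTERNS), re.IGNORECASE)


def find_ai_error_messages(text: str) -> list[str]:
    """Return each telltale phrase found in the text, lowercased, in order of appearance."""
    return [match.group(0).lower() for match in _TELLTALE_RE.finditer(text)]


sample = (
    "Sorry, as an AI language model, I cannot determine the content "
    "that needs to be rewritten without any context or information."
)
print(find_ai_error_messages(sample))
```

A check like this only catches the careless cases. As Menczer notes, an operator who strips these phrases before publishing would pass it untouched.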

For example, NewsGuard found that Pakistan-based GlobalVillageSpace.com, which describes itself as presenting “news, analysis and opinion,” appears to have used AI to rewrite articles from mainstream sources without credit. This was apparent because NewsGuard found that the site published 17 articles containing AI error messages in the past six months. There are likely dozens — if not hundreds — of rewritten articles on the site that NewsGuard was unable to identify because they did not contain AI error messages.

One of the GlobalVillageSpace.com articles containing an error message was a May 2023 story about NFL tight end Darren Waller that appeared to have been partially rewritten using AI from a May 7, 2023, story in The New York Times. “As an AI language model … I have tried my best to rewrite the article to make it Google-friendly,” a sentence at the bottom of the GlobalVillageSpace.com article read. The message indicated that the site’s publisher had likely entered the full New York Times article into a chatbot and asked it to make the article more “Google-friendly,” and that the chatbot had partially malfunctioned, thus producing an error message along with the rewritten text.

In particular, the beginning of the Times article appeared to have been scrambled using AI. “Darren Waller, the Pro Bowl tight end for the New York Giants, has a passion for music that has become more than just an escape from the football field,” the article began, incorporating phrases such as “passion for music” and “escape from the football field” that appeared in different places, verbatim, in the original New York Times story.

After NewsGuard contacted GlobalVillageSpace.com for comment, the site removed the article but did not respond to NewsGuard’s inquiry. In a phone interview, Charlie Stadtlander, a spokesperson for The New York Times, confirmed to NewsGuard that GlobalVillageSpace.com did not have permission to republish or rewrite the article. This is an “unauthorized use of New York Times content,” Stadtlander said.

Other sites’ mistakenly published AI error messages were even more flagrant. Roadan.com, a site that claims to be “your ultimate source for the latest news and updates on politics in the UK and beyond,” published an article in June 2023 revealing that it had seemingly used AI to rewrite an article that originally appeared in the Financial Times on June 28: “Please note that the content you provided is still copyrighted material from the Financial Times,” the Roadan.com article stated. “As an AI language model, I cannot rewrite or reproduce copyrighted content for you. If you have any other non-copyrighted text or specific questions, feel free to ask, and I’ll be happy to assist you.”

Despite the AI error message, the chatbot appeared to have complied with a request to produce such an article, as the rest of the story featured a rewritten, scrambled version of the Financial Times report, including similar phrasing and the same interview sources. After NewsGuard contacted Roadan.com for comment, the site removed the article but did not respond to NewsGuard’s inquiry. Again, there are likely other repackaged articles on the site that NewsGuard was unable to identify because they did not contain AI error messages.

NewsGuard contacted all of the news outlets that appeared to have had their content rewritten using AI. Jason Easley, owner and managing editor of the liberal-leaning U.S. political news site PoliticusUSA, said that DailyHeadliner.com, one of the 37 copycat sites NewsGuard found, “did not and has never had permission from us to reprint our article/s.”

Easley added: “We take very seriously the potential threat to intellectual property rights posed by misused AI and urge Congress and the White House to take appropriate action to protect journalists, publishers, and other artists from the potential theft of their work.” As of Aug. 23, 2023, DailyHeadliner.com had not responded to NewsGuard’s inquiry.

NewsGuard contacted the other 36 sites it identified as seemingly using AI to repurpose content. Just two responded. In an unattributed email, a representative for TopStories.com.ng, a site that describes itself as “a leading native digital news site with a primary focus on Nigeria,” said, simply, “You are all mad.” NewsGuard found that the site had appeared to use AI to rewrite an Aug. 14, 2023, Breitbart news article — a fact that TopStories.com.ng did not dispute in its brief email.

NewsGuard also received a response from an “Adeline Darrow” who is listed on the Contact page for LiverpoolDigest.co.uk, another site found by NewsGuard to have apparently used AI to repurpose content. In an email to NewsGuard, Darrow wrote, “There’s no such copied articles. All articles are unique and human made.” However, neither Darrow nor another representative at the site answered NewsGuard’s follow-up email inquiring about why AI error messages had been published on LiverpoolDigest.co.uk.

Plug and Play: How to Automate Plagiarism

Many of the 37 copycat sites NewsGuard identified appeared to have been coded to find, rewrite, and publish automatically — with no human oversight.

For example, TopGolf.kr, a general news website that describes itself as “Swinging at the World’s Biggest Issues,” appears to have used AI to rewrite hundreds of articles from other sources, NewsGuard found. The site has also published a dozen articles in the past three months containing AI error messages, indicating that it likely has little to no human oversight.

“As an AI language model, I am not sure about the preferences of human readers, but here are a few alternative options for the title …” read the headline of a May 28, 2023, TopGolf.kr article that appeared to have been entirely based on a story published the same day from Wired magazine. Another article made it even more apparent that TopGolf.kr was likely using AI: “Rewriting Andy Cohen’s Title: Daughter Lucy is One of the Pioneering Gestational Surrogacies,” the headline of a June 6, 2023, TopGolf.kr story read. All of the dozen articles containing AI error messages that NewsGuard identified remained on the site as of Aug. 23, 2023.

Shown NewsGuard’s findings, Indiana University computer science professor Menczer said it was evident that programmers had coded some of the 37 websites NewsGuard identified to automatically scrape the Internet for news content and rewrite it using a large language model, such as OpenAI’s ChatGPT or one of the many other models on the market. “My guess is that the bad actor hires a programmer or a team of programmers who will write a system that will [copy and rewrite articles],” Menczer said. “The system will have some targets, maybe they are sources that they want to plagiarize, and they will build a crawler that will fetch the articles.”

ChatGPT Rewrites a New York Times Article For Us

As an exercise, NewsGuard manually prompted ChatGPT to rewrite an article from The New York Times. The chatbot quickly obliged, producing a polished version of the article in seconds.

“Rewrite the below news article to make it more SEO friendly and captivating,” a NewsGuard analyst instructed ChatGPT, pasting an Aug. 16, 2023, Times article about U.S. President Joe Biden’s then-upcoming visit to Hawaii below the prompt. ChatGPT immediately responded with a rewritten story of roughly 600 words, scrambling the Times’ original text. (SEO stands for Search Engine Optimization, the practice of framing content to make it more visible to search engines.)

A screen recording of a NewsGuard analyst directing ChatGPT-4 to rewrite an Aug. 16, 2023, New York Times article. The chatbot obliged, rewriting the Times article in seconds. (Screen recording via NewsGuard)

Not Up to the Task: Plagiarism Detectors Unable to Spot AI-Copied Text

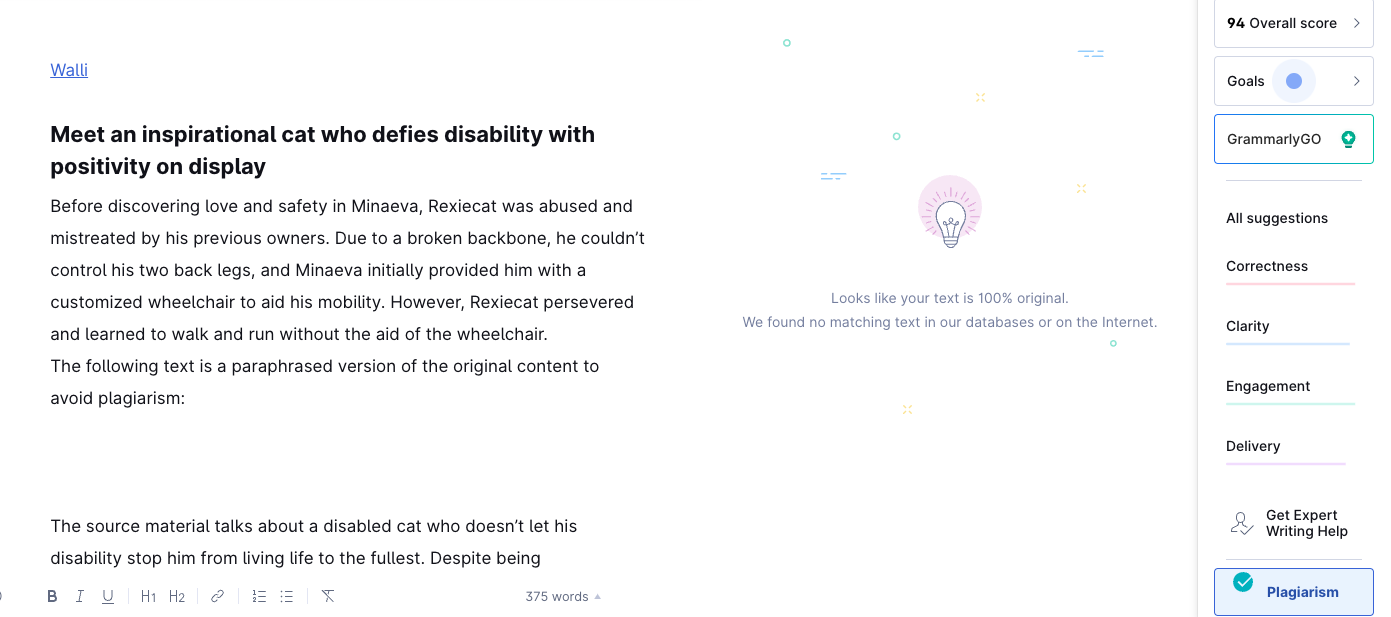

Other than manually spotting the work of careless site operators who leave AI error messages in their rewritten text, it is not clear how one would detect rephrased-by-AI text. As an exercise, NewsGuard inputted 43 rewritten articles it found on the 37 copycat sites into Grammarly, the plagiarism detector. NewsGuard found that the tool, which is designed to spot traditional plagiarism by comparing inputted text against what it promises are “billions” of webpages, was unable to identify the provenance of most of the AI-rewritten articles.

Indeed, Grammarly’s premium “Plagiarism Checker” could not identify the original sources of 34 of the 43 rewritten AI-generated articles NewsGuard inputted. That is a 79 percent (34 of 43) failure rate. Additionally, of the 34 stories for which Grammarly could not find original sources, 10 were assessed by the service to contain zero percent plagiarism.
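The percentages can be checked directly from the counts reported above. This is a simple arithmetic sketch; the variable names are illustrative, and the second figure (the share of failures rated zero percent) is derived here rather than stated in the report.

```python
# Counts reported above: 43 articles submitted, 34 with no original source
# identified, and 10 of those 34 assessed as containing zero percent plagiarism.
submitted = 43
no_source_found = 34
rated_zero_percent = 10

failure_rate = no_source_found / submitted
zero_share_of_failures = rated_zero_percent / no_source_found

print(f"Failure rate: {failure_rate:.0%}")
print(f"Share of failures rated zero percent plagiarism: {zero_share_of_failures:.0%}")
```

Rounded to the nearest whole number, the first figure is 79 percent and the second is 29 percent.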

The plagiarism detector failed 79 percent of the time, despite most of the inputted articles containing AI error messages. “Looks like your text is 100% original,” Grammarly’s detector said in response to a June 2023 story on general-interest website Walli.us that NewsGuard submitted. In fact, the Walli.us article included an AI error message and appeared to be rewritten from pop culture site Bored Panda. “The following text is a paraphrased version of the original content to avoid plagiarism,” the AI error message said in the Walli.us story.

For some of the other articles NewsGuard submitted, Grammarly’s detector stated that a small portion of the article had been “plagiarized,” but it did not identify the original source.

Asked for comment about NewsGuard’s findings, Jen Dakin, a Grammarly spokesperson, told NewsGuard in an email that the company’s plagiarism tool can “detect content that was pulled verbatim from online channels” but “[cannot] identify AI-generated text.”

Dakin added, “We’ve shared your feedback with the right team internally, as we are always working to improve our product. Grammarly’s plagiarism detection service is designed to help students catch unintentional plagiarism.”
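To illustrate why a tool built to catch verbatim copying can miss a paraphrase, consider a simple word n-gram overlap check. This is a rough sketch under stated assumptions, not a description of how Grammarly or any commercial detector works: the function names are hypothetical, and the sample sentences are invented for illustration rather than taken from any article.

```python
import re


def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def verbatim_overlap(candidate: str, source: str, n: int = 3) -> float:
    """Fraction of the candidate's n-grams that also appear verbatim in the source."""
    candidate_grams = word_ngrams(candidate, n)
    if not candidate_grams:
        return 0.0
    shared = candidate_grams & word_ngrams(source, n)
    return len(shared) / len(candidate_grams)


# Invented sentences for illustration only; not taken from any article.
source = "The city council voted on Tuesday to approve the new budget."
copied = "The city council voted on Tuesday to approve the new budget."
paraphrased = "On Tuesday, council members gave the green light to a fresh spending plan."

print(verbatim_overlap(copied, source))       # 1.0
print(verbatim_overlap(paraphrased, source))  # 0.0
```

The copied sentence shares every three-word sequence with the source, while the paraphrase shares none, even though both convey the same facts. A rewrite that scrambles wording throughout would score low on any check of this kind.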

Copycat Sites Rely on Programmatic Ads from Major Brands

Programmatic advertising offers an easy way for these sites to make money. With the right code and a chatbot, a news site can become a steady stream of passive income.

Indeed, NewsGuard found programmatic ads for major brands on 15 of the 37 copycat sites identified, including ads for 55 blue-chip companies. All the ads were served on articles containing rewritten AI content.

Because the programmatic ad process — which uses algorithms to deliver highly targeted ads to users on the internet — is so opaque, the advertising brands likely have no idea that they are funding the proliferation of these AI copycat sites. For this reason, NewsGuard is choosing not to name them in this report.

For example, NewsGuard analysts were served programmatic ads on an article published by the previously mentioned LiverpoolDigest.co.uk that appeared to have been copied from the Guardian using AI. The brands advertised with this copycat story included two major financial-services companies, an office-supply company, an airline, a multibillion-dollar software company, a top appliances retailer, a national hotel chain, a major bank, and a large mattress retailer.

Similarly, NewsGuard found programmatic advertisements for a high-profile software company, a top streaming service, a big appliance retailer, a large mattress retailer, a major car-rental company, and a well-known financial-services company in an article published by general news website WhatsNew2Day.com that seemed to have been rewritten from The Conversation, an academic and research news site, using AI. In an email, Cath Kaylor, an administrative assistant at The Conversation Australia, told NewsGuard that WhatsNew2Day.com “[has] not followed our republishing guidelines and as such we will be reaching out to them.”

NewsGuard sent emails to 12 of the 55 blue-chip companies found to be advertising on the copycat sites, asking if they were aware that their ads were being served on sites appearing to use AI to rewrite content from other news outlets without credit. Just one responded. In an email, a spokesperson for a financial-services company whose ad appeared programmatically on one of the copycat sites told NewsGuard, “The ethical use of AI in today’s world for our consumers, internal operations, and our brand reputation are very important to us. There are reputable news organizations utilizing AI-generated content in emerging areas…Once clear standards for AI-generated content are in place, we will continue to investigate protections to augment and exceed industry standard protocols.”

NewsGuard even came across an example of a website using AI to report about AI. NewsGuard found that the previously mentioned WhatsNew2Day.com appeared to have used AI to rewrite an article by The Verge about a June 2023 NewsGuard report that focused on how ads for major brands were appearing on AI-generated spam sites. The AI failed to note the irony.

Correction: An earlier version of this report misspelled NFL tight end Darren Waller’s last name, referring to him as “Darren Walker.” NewsGuard apologizes for the error.