Image via Canva

Editor’s Note: As of February 2024, the Misinformation Monitor is now Reality Check, a weekly newsletter on misinformation and media online. Learn more and subscribe here on Substack.

The Year AI Supercharged Misinformation: NewsGuard’s 2023 in Review

By Jack Brewster, Eric Effron, Zack Fishman, Sam Howard, Eva Maitland, McKenzie Sadeghi, and Jim Warren | Published on Dec. 27, 2023

The rise of artificial intelligence in 2023 transformed the misinformation landscape, providing new tools for bad actors to create authentic-looking articles, images, audio, videos, and even entire websites to advance false or polarizing narratives meant to sow confusion and distrust.

NewsGuard monitored and exposed how AI tools are used to push everything from Russian, Chinese, and Iranian propaganda to healthcare hoaxes to false claims about the wars in Ukraine and Gaza, making the fog of war even foggier. As the tools improved, the falsehoods produced by generative AI became better written, more persuasive, and more dangerous.

NewsGuard analysts conducted early "red-teaming" tests of how language models, such as OpenAI's ChatGPT, could be prompted (or weaponized) to generate falsehoods by bypassing their safeguards. In August, for example, NewsGuard tested ChatGPT-4 and Google's Bard with a random sample of 100 leading prompts derived from NewsGuard's database of falsehoods, known as Misinformation Fingerprints. ChatGPT-4 generated 98 of the 100 myths, while Bard produced 80 of the 100.

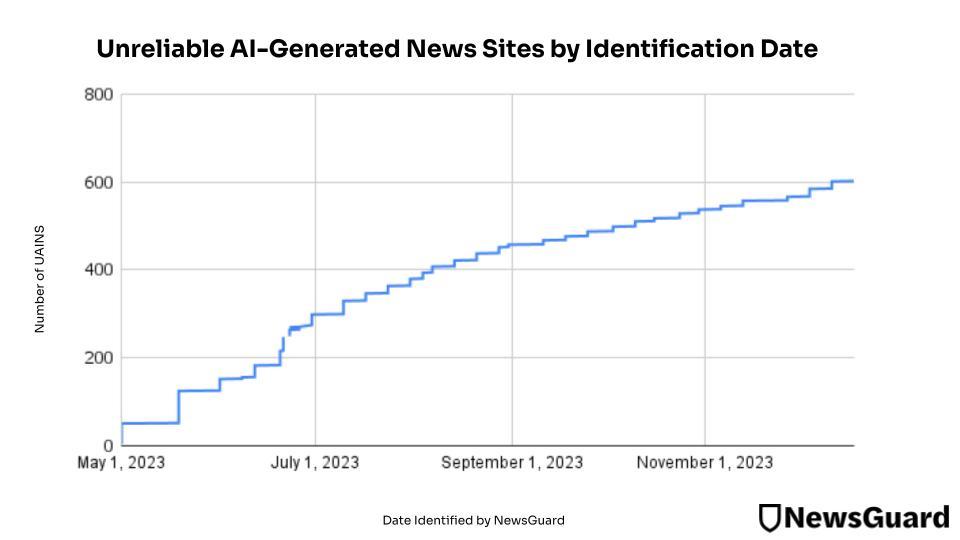

We have also discovered a growing phenomenon of bad actors creating entire websites generated by AI, operating with little to no human oversight. To date, NewsGuard's team has identified 614 such Unreliable AI-generated News Sites, labeled "UAINS," spanning 15 languages: Arabic, Chinese, Czech, Dutch, English, French, German, Indonesian, Italian, Korean, Portuguese, Spanish, Tagalog, Thai, and Turkish (more on this below).

In this report, we identify some of the most damaging uses of AI and the year's most widely shared false narratives on NewsGuard-rated sites, and we include a graph showing the growth of UAINS over time.

On the good-news side of the ledger, we also highlight a selection of lesser-known trustworthy sites that we call Unsung Heroes. They produced reliable, impactful journalism, and some of them serve local and niche markets. And in our "I wish I had known" list, we highlight websites whose Nutrition Labels suddenly became urgently relevant.

The George Santos Award: The Most ‘Creative’ Uses of AI to Generate Misinformation

At their best, AI tools can help people become more productive, analyze massive data sets, and diagnose diseases. In the wrong hands, however, AI software turbocharges misinformation, providing bad actors with unlimited content creators: AI models willing and able to instantly churn out text, image, video, and audio falsehoods. AI has created the next great "misinformation superspreader."

Below, NewsGuard has highlighted some of the most impactful uses of AI to generate falsehoods in 2023, as selected by NewsGuard analysts and editors.

Obama? No. AI Audio Tools Used to Generate Conspiracy Videos on TikTok, at Scale

A screenshot from a TikTok video featuring an AI-generated “Obama” reading a fake “statement” on Campbell’s death. (Screenshot via NewsGuard)

In September, NewsGuard identified a network of 17 TikTok accounts using hyper-realistic AI voice technology to gain hundreds of millions of views on conspiracy content. The network, which NewsGuard believes to be the first viral misinformation campaign on TikTok using AI audio technology, appeared to use AI text-to-speech software from ElevenLabs to instantly generate narration and other voices in the videos. A video advancing the baseless claim that former President Barack Obama was connected to the death of his personal chef, Tafari Campbell, showed an AI-generated "Obama" reading a fake "statement" on Campbell's death. Read NewsGuard's report here.

Plagiarism-Bot? Using ChatGPT and Other Chatbots to Deceptively Rewrite Content from Mainstream News Outlets

In August, NewsGuard discovered a novel way that low-quality sites were using AI to copy content from well-known mainstream news sources without detection. NewsGuard identified 37 websites using chatbots, such as ChatGPT, to rewrite, without credit, news articles that first appeared in outlets including CNN, The New York Times, and Reuters. It is unclear how to describe this new practice, including whether articles rewritten using AI constitute "original content." At best, it could be called "efficient aggregation." At worst, it might be seen as "turbocharged plagiarism." Read NewsGuard's report here.

Citing a Response From ChatGPT to Advance a False Claim About Camels

In April, state-run China Daily (NewsGuard Trust Score: 44.5/100) baselessly claimed in a video that a laboratory in Kazakhstan, allegedly run by the U.S., was conducting secret research on the transmission of viruses from camels to humans to harm China. The video cited a purported confirmation of the claim by ChatGPT. Read NewsGuard’s report here.

Growth of Unreliable AI-generated News Sites in 2023

In May 2023, NewsGuard was first to identify the emergence of what it calls Unreliable AI-generated News Sites (UAINS). These sites — which appear to be the next generation of content farms — have grown more than tenfold this year, soaring from 49 domains in May 2023 to more than 600 as of December 2023.

Sites flagged as UAINS must meet four criteria:

- There is clear evidence that a substantial portion of the site’s content is produced by AI.

- Equally important, there is strong evidence that the content is being published without significant human oversight.

- The site is presented in a way that an average reader could assume that its content is produced by human writers or journalists, because the site has a layout, generic or benign name, or other content typical of news and information websites.

- The site does not clearly disclose that its content is produced by AI.

NewsGuard’s Falsehood of the Year: Treatment of ‘QAnon Shaman’ Proves Jan. 6 Was Not a Riot

In 2023, NewsGuard identified and debunked hundreds of new false narratives. The false claim most frequently flagged on NewsGuard-rated sites was a myth about the Jan. 6, 2021, attack on the U.S. Capitol that emerged more than two years after the event.

“Police officers helped escort the ‘QAnon shaman’ Jacob Chansley during the Capitol riot”

This fantasy appeared on 50 NewsGuard-rated sites in 2023.

The myth that police officers helped escort Jacob Chansley, the so-called "QAnon shaman," during the Capitol riot emerged during a March 6, 2023, segment of then-Fox News host Tucker Carlson's show that featured a montage of short video clips showing police officers walking alongside Chansley inside the Capitol. Carlson said that the footage showed that officers "acted as his tour guides," implying that Capitol Police officers facilitated the Jan. 6 riot. In fact, court documents, statements from Capitol Police officers and federal prosecutors, and additional video footage make clear that Chansley repeatedly disobeyed orders from police officers to leave the building, a fact that he affirmed as "true and accurate" in a September 2021 plea agreement.

The falsehood spread widely among some conservative websites and eventually made its way to Russian state-run media. The claim received a significant boost after X owner Elon Musk wrote in a March 10, 2023, post, “Chansley got 4 years in prison for a non-violent, police-escorted tour!?” In May, it was revived after Chansley was released from federal prison 14 months early, with websites attempting to link his early release to the Carlson footage, despite Chansley’s attorney confirming that the footage did not play a role.

Read NewsGuard’s Misinformation Fingerprint here.

I Wish I Had Known …

At NewsGuard, we engage in "pre-bunking": by identifying news and information websites that traffic in false and egregiously misleading information, we are able to warn users to proceed with caution when reading any stories these sites publish. Below are examples of sites whose Nutrition Labels would have been valuable to readers and to the broader public discourse.

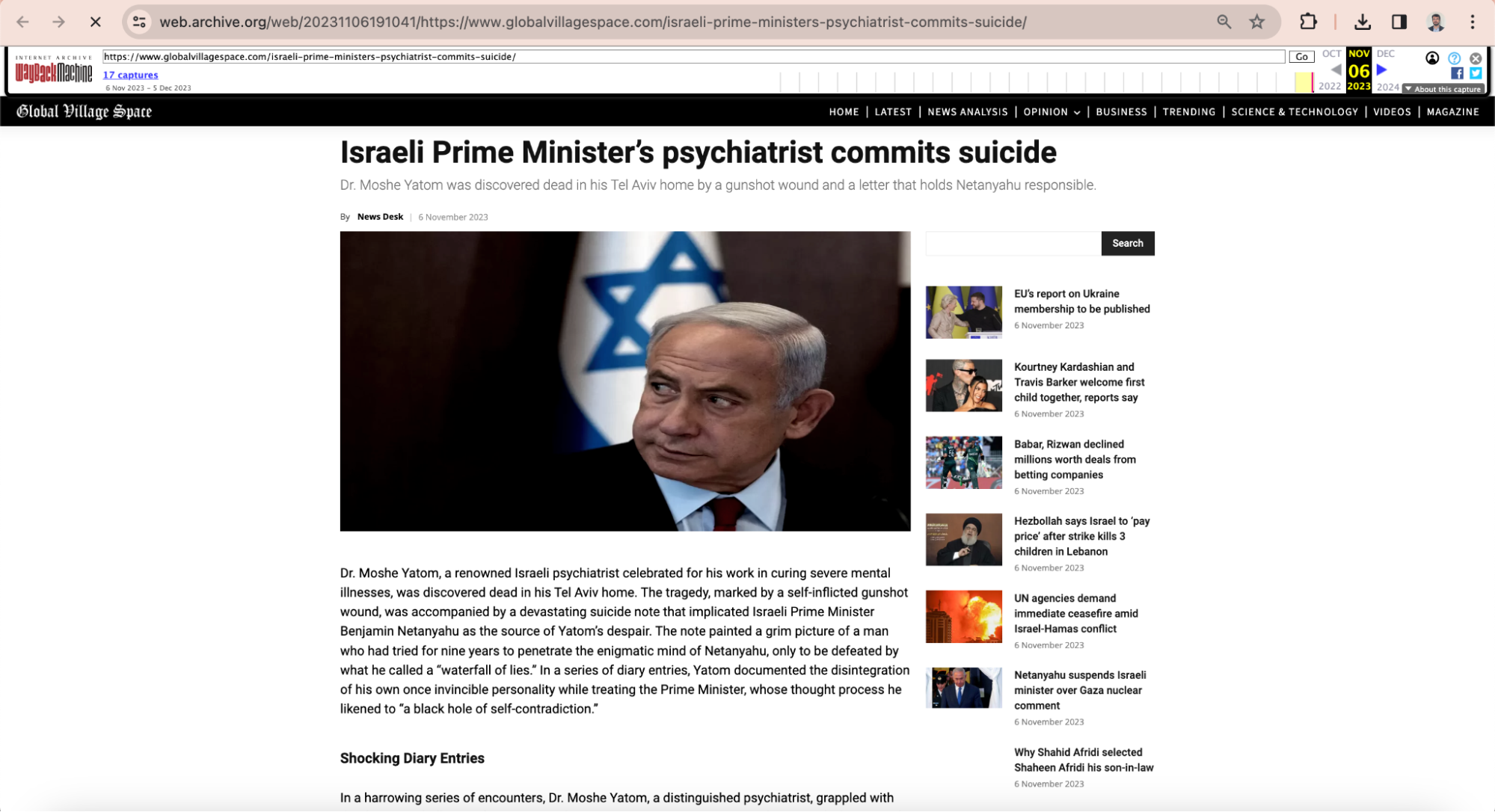

GlobalVillageSpace.com

A site owned and operated by a Pakistani journalist that publishes general news apparently generated by artificial intelligence without human oversight and without disclosure to readers. (Note: GlobalVillageSpace.com is classified as an Unreliable AI-generated News Site (UAINS) by NewsGuard. UAINS are flagged by NewsGuard but do not receive Trust Scores.)

In November, NewsGuard found that the site was the source of a viral false claim that Israeli Prime Minister Benjamin Netanyahu had a psychiatrist who was driven to suicide, explaining in a suicide note and an unfinished manuscript that he took his own life because his patient was so difficult and irrational. In fact, Netanyahu does not have a psychiatrist, no such psychiatrist died by suicide, and there was no suicide note or unfinished manuscript. The site appeared to have used AI to rewrite a satirical article from 2010 about Netanyahu's nonexistent psychiatrist. Although the story was false from beginning to end, it was spread by Iranian state broadcasting, among many others.

Read the Nutrition Label for GlobalVillageSpace.com.

Dunning-Kruger-Times.com (NewsGuard Trust Score: 20/100)

In June, Republican Texas Gov. Greg Abbott, citing Dunning-Kruger-Times.com, wrote on X that concertgoers did a "Good job" by booing country star Garth Brooks off stage at a Texas music festival, presumably because Brooks is perceived as too liberal. However, the incident touted by Abbott never happened, and no such music festival was held. The governor was apparently duped into sharing a "news" story from this hoax website.

As NewsGuard's Nutrition Label states, Dunning-Kruger Times is part of a network of sites, run by hoaxer Christopher Blair, that fabricate stories that are then shared as real news. Other stories published by this network have falsely reported the deaths of actors Tom Hanks and Jon Voight and of Hillary Clinton.

Read the Nutrition Label for Dunning-Kruger-Times.com.

Unsung Heroes

Some of the websites and podcasts with high NewsGuard Trust Scores receive little notice, despite producing impactful, fact-based news and analysis. Below is a list of the best news sites and podcasts that you might never have heard of.

Websites:

Scimex.org (Trust Score: 92.5/100)

An Australian site that is intended to help journalists cover and explain scientific research. Run by the Australian Science Media Centre and the Science Media Centre of New Zealand, it offers boiled-down explanations of research in original articles, a network of experts for journalists to contact, and images that can be used for stories, all for free.

Platformer.news (Trust Score: 92.5/100)

Headed by a veteran Silicon Valley reporter, the site covers the "intersection of tech and democracy," including issues and technologies relevant to social media and other tech platforms. The site says it will help users understand "the weird new future: internet culture, mega-platform grotesquerie, crypto conspiracies, deep forum lore, fringe politics, and other artifacts of what's to come."

RetractionWatch.com (Trust Score: 100/100)

The site serves as a de facto watchdog of scientific journals. It is a blog and database dedicated to chronicling retractions of journal articles. Its mission is "tracking retractions as a window into the scientific process." It is produced by the Center for Scientific Integrity, a New York City-based 501(c)(3) nonprofit.

DailyYonder.com (Trust Score: 100/100)

Based in a town in rural eastern Kentucky, the site covers economic, political, and cultural issues relevant to rural America. Owned by the nonprofit Center for Rural Strategies, it has covered housing crises hitting rural college students, tracked COVID-19 infection rates across states, and informed readers about the issues affecting rural Americans ahead of the 2024 presidential race.

On3.com (Trust Score: 87.5/100)

The site covers the wickedly competitive recruiting of high school athletes and new college rules on "Name, Image, Likeness" (NIL) that allow outside payments to athletes. It is similar to two strong competitors, Rivals and 247Sports. Typical headlines include "Crash Bandicoot, Activision ink six athletes to NIL deals," "Koren Johnson, 2022 four-star, commits to Washington," and "Meet Azzi Fudd, the next big thing in women's basketball — and in NIL." If you want to know why a quarterback at Duke leaves for Notre Dame, or a University of Michigan basketball star splits for Kansas, the answer is now simple: follow the money.

Podcasts (NewsGuard podcast Trust Scores are out of 10):

Advisory Opinions (Trust Score: 10/10)

A sharp podcast from two conservative lawyers turned political and cultural analysts: David French, a New York Times columnist, and Sarah Isgur, a former Justice Department spokesperson during the Trump administration. The hosts assess legal, cultural, and media developments and are critical of both the left and the right. They even praised the ACLU, a frequent target of the right, for deciding to defend the National Rifle Association in a free-speech case against the New York State Department of Financial Services.

Drilled (Trust Score: 10/10)

A left-leaning, anti-fossil-fuel podcast that offers solid reporting on the corporate and political factors that it argues contribute to climate change. It offers multiple viewpoints but tends to rebut climate change skeptics and critics of what it calls "climate accountability." It is hosted by environmental journalist Amy Westervelt, whose honors include an Edward R. Murrow Award for investigative reporting. A typical episode covers the potential environmental impact of deep-sea mining, which has been conducted experimentally on the deep ocean floor.

Manifest Space with Morgan Brennan (Trust Score: 10/10)

A weekly podcast in which CNBC anchor Morgan Brennan covers the latest developments in space exploration. Brennan discusses significant issues, such as the use of spy satellites and the militarization of space, and interviews industry leaders.