11/07/2023

Few Americans Trust Generative AI Models to Avoid Spreading Misinformation

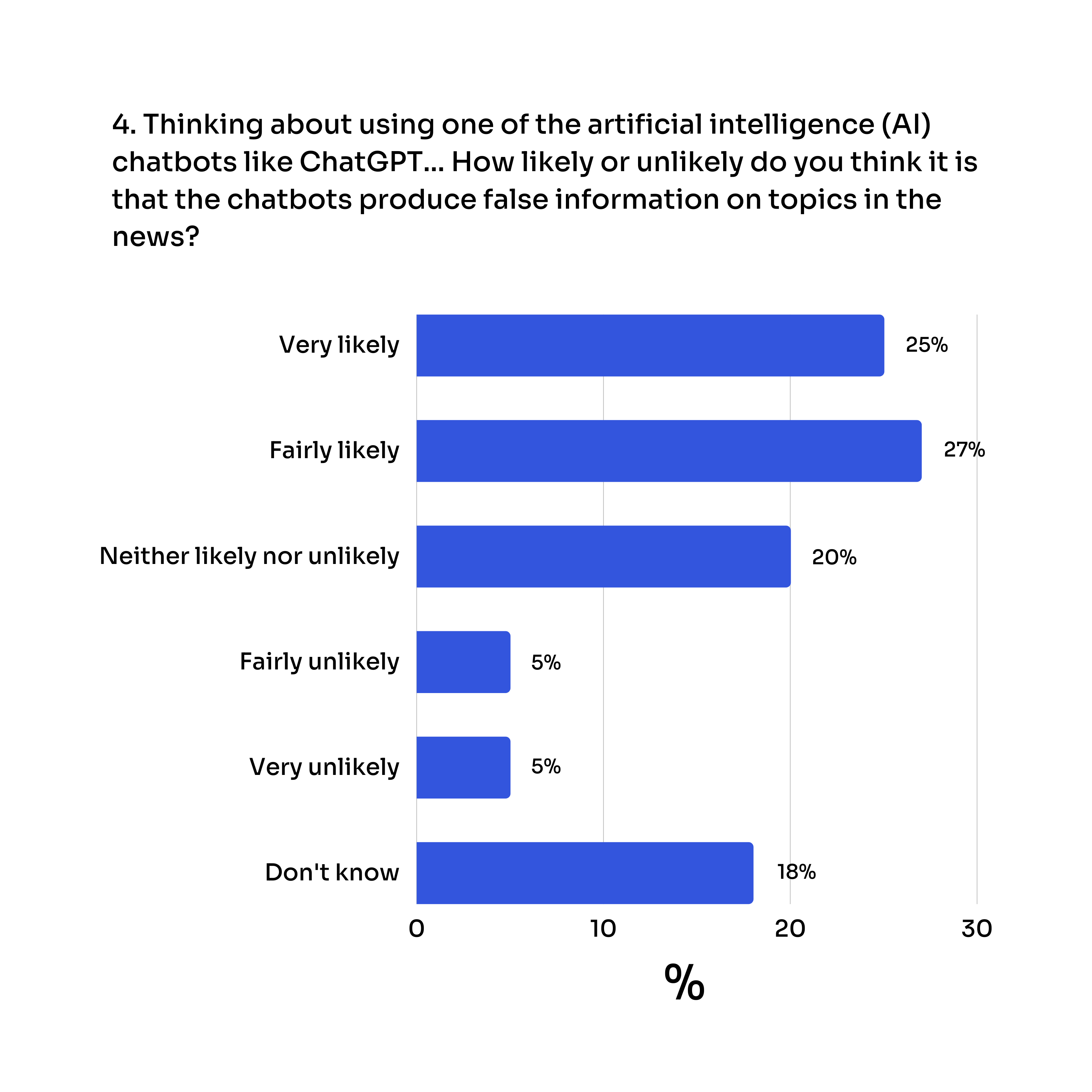

New research from NewsGuard shows most Americans think AI is likely to spread false information about news topics—and only 10% think this is unlikely to occur

(New York, Nov. 7, 2023) A majority of Americans expect AI chatbots to spread false information about news topics, according to a survey YouGov conducted in the U.S. for NewsGuard.

The YouGov survey, conducted exclusively for NewsGuard, asked a sample of 1,147 Americans to rate how likely or unlikely it is that chatbots such as OpenAI’s ChatGPT will produce false information related to topics in the news. Just 10% of respondents said it was “fairly unlikely” or “very unlikely” that chatbots would provide false information, while 52% said it was “fairly likely” or “very likely.”

The findings are consistent with earlier NewsGuard studies, which found that AI chatbots often repeat false claims when prompted about news topics.

In a January 2023 report, NewsGuard analysts tested ChatGPT, then running on OpenAI’s GPT-3.5 model, by prompting it on news topics associated with well-known false narratives, and found that it responded with misinformation in 80% of the tests. A subsequent report on the newer GPT-4 model found that it spread misinformation “more frequently, and more persuasively” than its predecessor, repeating the false narratives in 100% of NewsGuard’s tests. A similar exercise with Google’s Bard chatbot yielded comparable results: Bard spread false narratives in 80% of cases.

“Generative AI is poised to become the dominant technology through which internet users receive information—with AI tools being incorporated into search engines, virtual assistants, and other technologies that Americans use to keep informed,” said Gordon Crovitz, NewsGuard Co-CEO. “This new research suggests that not only do AI models have a problem with reliability when it comes to news topics—but their users know it and have lost trust.”

The false claims repeated by AI models in NewsGuard’s tests ranged from election misinformation to harmful conspiracy theories. For example, when NewsGuard analysts prompted ChatGPT, the model was willing to repeat the false conspiracy theory that the mass shooting at an elementary school in Sandy Hook, Connecticut, was staged. When an analyst prompted Google’s Bard, it repeated the false claim that the shooting at the Pulse nightclub in Orlando was a “false flag” operation.

“This data should serve as a wake-up call to the dozens of companies investing billions of dollars in building products based on generative AI,” said NewsGuard co-CEO Steven Brill. “Not only do users not trust this technology to avoid giving them false information—but our red-teaming research shows that their lack of trust may well be warranted in many cases.”

“AI companies will need to add strong guardrails that reduce hallucinations and prevent the spread of misinformation—or risk losing consumers’ trust for good,” Brill added.

NewsGuard has also red-teamed AI models whose developers used reliability ratings for news sources and data on false narratives in the news to fine-tune those models and add guardrails against misinformation. These models showed significant improvements as a result of the trust data. “The good news for the AI industry is that when the machines are given access to trusted information about sources of news and topics in the news that is labeled as likely to be reliable, the AI models provide responses on topics in the news accurately and avoid the spread of misinformation,” said Crovitz. “It’s encouraging for the future reputation of AI that when AI models gain access to trust data, they deliver trustworthy news.”

Details and Methodology:

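NewsGuard commissioned the study from YouGov in October 2023, polling a nationally representative sample of 1,147 Americans. The survey asked respondents how likely or unlikely they thought it was that chatbots such as OpenAI’s ChatGPT would produce false information related to topics in the news.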

All figures, unless otherwise stated, are from YouGov Plc. The total sample size was 1,147 adults. Fieldwork was undertaken from October 19 to 21, 2023. The survey was carried out online. The figures have been weighted and are representative of all U.S. adults (aged 18+).

About NewsGuard

Founded by media entrepreneur and award-winning journalist Steven Brill and former Wall Street Journal publisher Gordon Crovitz, NewsGuard provides transparent tools to counter misinformation for readers, brands, and democracies. Since launching in 2018, its global staff of trained journalists and information specialists has collected, updated, and deployed more than 6.9 million data points on more than 35,000 news and information sources, and cataloged and tracked all of the top false narratives spreading online.

NewsGuard’s analysts, powered by multiple AI tools, operate the trust industry’s largest and most accountable dataset on news. These data are deployed to fine-tune and provide guardrails for generative AI models, enable brands to advertise on quality news sites and avoid propaganda or hoax sites, provide media literacy guidance for individuals, and support democratic governments in countering hostile disinformation operations targeting their citizens.

As one indicator of the scale of its operations, NewsGuard’s analysts have applied its apolitical and transparent criteria to rate news sources accounting for 95% of online engagement with news across nine countries.